Language Guided Monte Carlo Tree Search (LGMCTS)

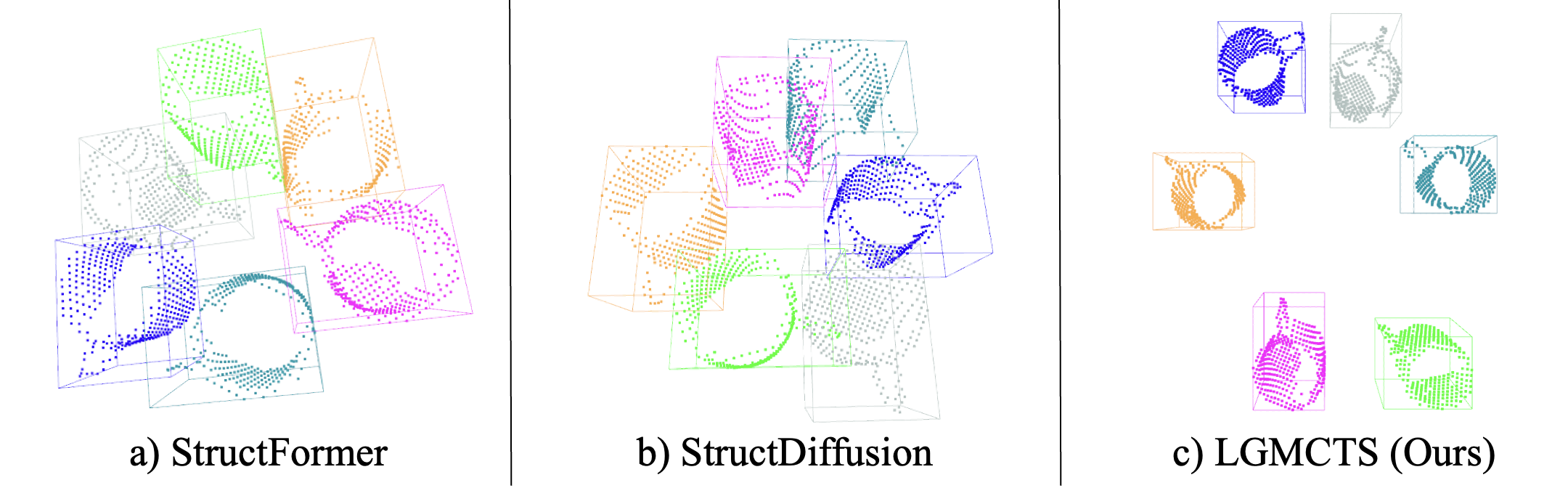

We introduce LGMCTS, a technique for executable semantic object rearrangement. LGMCTS uses LLMs to parse free-form language and treats object placements as probability distributions rather than fixed points. Rearrangement is framed as sequential sampling, in which the language instruction and the current scene jointly shape each object's pose distribution. The method also accounts for distracting objects, so that plans both satisfy the linguistic specification and remain executable.

Key Contributions

1. A method that simultaneously tackles semantic rearrangement goal generation and action planning.

2. A solution promoting zero-shot multi-pattern composition in semantic object rearrangement.

3. A new benchmark (ELGR) for executable semantic object rearrangement, which highlights the drawbacks of existing LLM-driven solutions and other learning-based semantic rearrangement methods.

Distribution Generation

The figure illustrates the (x, y) prior for the 'line' pattern. From left to right, the panels show stages K = 0 to K = 3. White stars indicate sampled poses. At K = 0, the pose can be placed freely. At K = 1, the pose is sampled outside a specified circle. For K ≥ 2, all poses align with the line formed by the first two poses.

For each object, we compute a conditional distribution using two components: a pattern prior function and a boolean function indicating free space.
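As a rough illustration of how the two components might combine, the sketch below multiplies a pattern prior by a boolean free-space mask on a small grid, renormalises the result, and samples a cell from it. The grid discretisation and the names `conditional_distribution` and `sample_cell` are assumptions made for this example and are not taken from the LGMCTS implementation.

```python
import random


def conditional_distribution(prior, free):
    """Mask a pattern prior with a boolean free-space grid and renormalise.

    prior: 2D list of non-negative floats (hypothetical discretised prior).
    free:  2D list of booleans, True where the cell is unoccupied.
    """
    masked = [
        [p if f else 0.0 for p, f in zip(prior_row, free_row)]
        for prior_row, free_row in zip(prior, free)
    ]
    total = sum(sum(row) for row in masked)
    if total == 0.0:
        raise ValueError("no free cell has non-zero prior probability")
    return [[v / total for v in row] for row in masked]


def sample_cell(dist, rng):
    """Draw one (row, col) cell in proportion to its probability."""
    cells = [
        (r, c)
        for r, row in enumerate(dist)
        for c, v in enumerate(row)
        if v > 0.0
    ]
    weights = [dist[r][c] for r, c in cells]
    return rng.choices(cells, weights=weights, k=1)[0]


# Toy example: a uniform prior on a 2x2 grid with one occupied cell.
prior = [[0.25, 0.25], [0.25, 0.25]]
free = [[True, False], [True, True]]
dist = conditional_distribution(prior, free)
cell = sample_cell(dist, random.Random(0))
```

Occupied cells receive zero probability, so a sampled pose always lands in free space while still following the shape of the prior.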

The pattern prior function is selected from a database using Sentence-BERT embeddings of given language instructions, matching the closest predefined function.
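The matching step can be pictured as a nearest-neighbour lookup over embeddings. The sketch below assumes cosine similarity as the closeness measure and uses tiny hand-written vectors in place of real Sentence-BERT embeddings; the source does not state the metric, and `select_pattern` and the database contents are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def select_pattern(instruction_embedding, pattern_db):
    """Return the pattern name whose stored embedding is closest.

    pattern_db maps a pattern name to the embedding of its description.
    In practice both sides would come from a Sentence-BERT encoder; the
    3-dimensional vectors below are placeholders for illustration only.
    """
    return max(
        pattern_db,
        key=lambda name: cosine_similarity(instruction_embedding, pattern_db[name]),
    )


pattern_db = {
    "line": [1.0, 0.0, 0.0],
    "circle": [0.0, 1.0, 0.0],
}
chosen = select_pattern([0.9, 0.1, 0.0], pattern_db)
```

The returned name would then be used to look up the corresponding predefined prior function.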

Within the LGMCTS context, patterns are described using parametric curves.

About the sequential sampling process

1. When no objects are sampled, the first object can be placed randomly.

2. With one object sampled, the next must maintain a minimum distance from the first.

3. From the third object onward, each object is placed according to the parametric curve's gradient angle, with some variance.
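The three steps above can be sketched for the line pattern as an unnormalised prior over a candidate (x, y) position. This is a simplified stand-in, not the authors' formulation: it scores the third and later poses by a Gaussian falloff in perpendicular distance to the line through the first two poses, and the function name `line_prior` and the parameters `min_dist` and `sigma` are assumptions.

```python
import math


def line_prior(point, sampled, min_dist=0.1, sigma=0.02):
    """Unnormalised prior weight of `point` given already-sampled poses.

    point:    candidate (x, y) position.
    sampled:  list of previously sampled (x, y) poses, in order.
    min_dist: assumed minimum spacing between the first two poses.
    sigma:    assumed spread of poses around the line.
    """
    x, y = point
    if len(sampled) == 0:
        # K = 0: the first pose can go anywhere.
        return 1.0
    x0, y0 = sampled[0]
    if len(sampled) == 1:
        # K = 1: exclude a circle of radius min_dist around the first pose.
        return 1.0 if math.hypot(x - x0, y - y0) >= min_dist else 0.0
    # K >= 2: favour points near the line through the first two poses.
    x1, y1 = sampled[1]
    dx, dy = x1 - x0, y1 - y0
    length = math.hypot(dx, dy)  # non-zero because of the spacing at K = 1
    perp = abs(dx * (y - y0) - dy * (x - x0)) / length
    return math.exp(-0.5 * (perp / sigma) ** 2)


on_line = line_prior((2.0, 0.0), [(0.0, 0.0), (1.0, 0.0)])
off_line = line_prior((2.0, 0.5), [(0.0, 0.0), (1.0, 0.0)])
```

A weight like this would still need to be masked by free space and normalised before sampling.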

Patterns are categorized as ordered or unordered, depending on whether the objects must appear in a specific sequence. The figure above illustrates the distribution generation process for the line pattern.